Building an ML Processor using CFU Playground (Part 2)

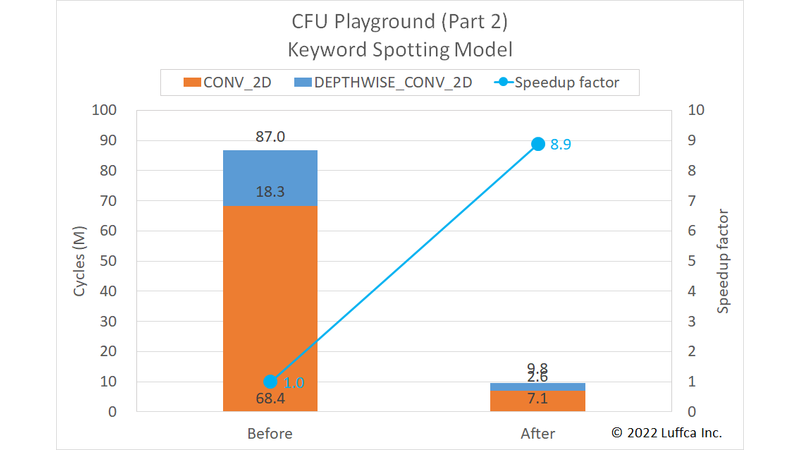

We built a machine learning (ML) processor on an Arty A7-35T using CFU Playground. In Part 2, we accelerate inference of the Keyword Spotting model by a factor of 8.9.

CFU stands for Custom Function Unit, a mechanism for adding hardware that implements RISC-V custom instructions to LiteX/VexRiscv.

Related articles in this series:

- Part 1: Person Detection int8 model

- Part 2: Keyword Spotting model (this article)

- Part 3: MobileNetV2 model

Note: Part 2 was updated on 8/21/2022 to reflect the results of Part 3.

CFU Playground

CFU Playground is a framework for tuning hardware (in practice, FPGA gateware) and software together to accelerate ML models running on Google’s TensorFlow Lite for Microcontrollers (TFLM, tflite-micro).

See the Part 1 article for more details.

Keyword Spotting Model

The Keyword Spotting model, called kws in CFU Playground, is one of the models added to TFLM from MLPerf Tiny Deep Learning Benchmarks.

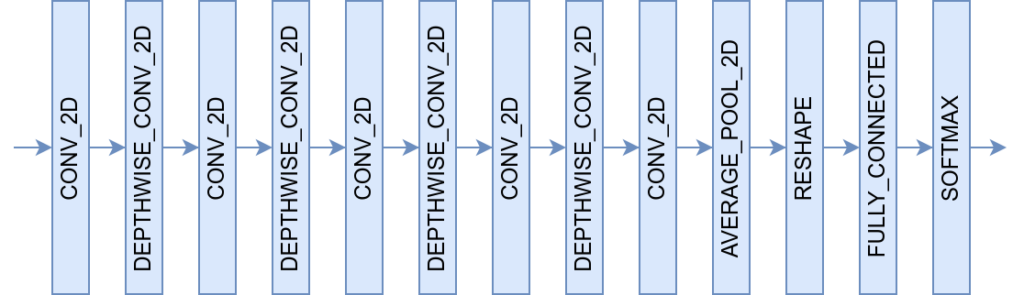

As shown in the figure, this is a 13-layer model consisting of five CONV_2D layers, four DEPTHWISE_CONV_2D layers (hereinafter dw_conv), and a few other layers.

From the third layer onward, every CONV_2D layer in this model operates as a 1×1 convolution (hereinafter 1x1_conv).
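To make the 1x1_conv case concrete, here is a minimal sketch of what a 1×1 convolution computes: for each pixel, a dot product across the input channels for every output channel. The function name `conv_1x1` and the tiny example tensors are illustrative assumptions, not code from CFU Playground or TFLM, and the int8 quantization steps (offsets, requantization) that the real kernels perform are left out.

```python
def conv_1x1(inputs, weights, bias):
    """Reference 1x1 convolution in plain Python.

    inputs:  nested list shaped [H][W][C_in]
    weights: nested list shaped [C_out][C_in]
    bias:    list of length C_out
    Returns a nested list shaped [H][W][C_out].
    """
    out = []
    for row in inputs:
        out_row = []
        for pixel in row:
            # Each output channel is a dot product over the input channels
            # of this single pixel; no neighbouring pixels are involved.
            out_row.append([
                sum(x * w for x, w in zip(pixel, w_out)) + b
                for w_out, b in zip(weights, bias)
            ])
        out.append(out_row)
    return out


# Hypothetical example: a 1x2 image with 3 input and 2 output channels.
image = [[[1, 2, 3], [4, 5, 6]]]
weights = [[1, 0, -1], [2, 2, 2]]
bias = [0, 1]
result = conv_1x1(image, weights, bias)
print(result)
```

Because no spatial window is involved, the inner loop is a pure multiply-accumulate over channels, which is the kind of operation a custom instruction can speed up.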

multimodel_accel project

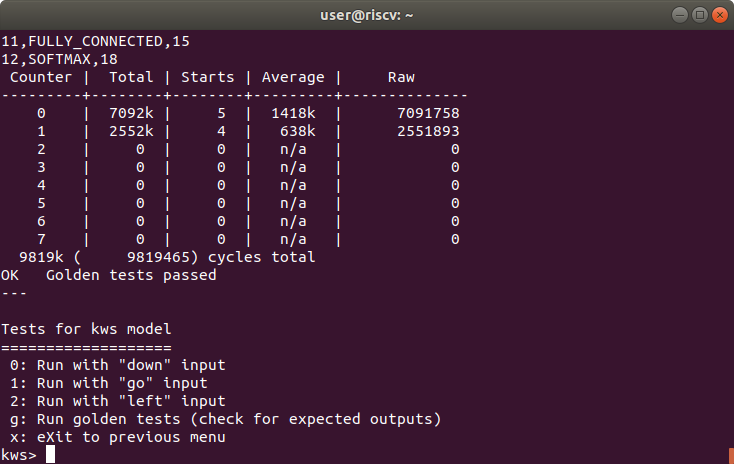

Result of golden tests for kws model

The multimodel_accel project is an in-house project that aims to accelerate multiple ML models, while most CFU Playground projects are model-specific to accelerate only one ML model.

The background is that the kws_micro_accel project in CFU Playground is specialized for the kws model, so it does not speed up other models such as the Person Detection int8 (hereinafter pdti8) model introduced in the Part 1 article.

To give the results first: as shown in the featured image and the table below, the total cycle count of the kws model was reduced from 87.0M to 9.8M, an 8.9x speedup.

| Keyword Spotting Model | Cycles (Before) | Cycles (After) | Speedup factor |
|---|---|---|---|
| CONV_2D | 68.4M | 7.1M | 9.6 |
| DEPTHWISE_CONV_2D | 18.3M | 2.6M | 7.2 |
| Total | 87.0M | 9.8M | 8.9 |

The gateware for the Arty A7-35T runs at 100MHz, so 9.8M cycles correspond to a latency of 98ms.

In the MLPerf Tiny v0.5 inference benchmarks, an Arm Cortex-M4 running at 120MHz has a latency of 181.92ms. Our result is therefore 1.8 times faster in a direct comparison, and 2.2 times faster when the operating frequencies are normalized.
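The arithmetic behind these figures can be reproduced with a short sketch. The cycle count, clock frequencies, and Cortex-M4 latency are taken from the text above; the helper name `latency_ms` and the variable names are assumptions made for illustration.

```python
def latency_ms(cycles, freq_hz):
    """Convert a cycle count at a given clock frequency to milliseconds."""
    return cycles / freq_hz * 1e3


# Figures quoted in the article.
cfu_cycles = 9.8e6       # kws inference on the CFU-accelerated processor
cfu_freq_hz = 100e6      # Arty A7-35T gateware clock
m4_latency_ms = 181.92   # Arm Cortex-M4, MLPerf Tiny v0.5
m4_freq_hz = 120e6

cfu_latency_ms = latency_ms(cfu_cycles, cfu_freq_hz)

# Direct comparison of wall-clock latency.
simple_ratio = m4_latency_ms / cfu_latency_ms

# Frequency-normalized comparison: compare cycle counts instead of time.
m4_cycles = m4_latency_ms / 1e3 * m4_freq_hz
normalized_ratio = m4_cycles / cfu_cycles

print(f"latency: {cfu_latency_ms:.1f} ms")
print(f"simple ratio: {simple_ratio:.2f}")
print(f"normalized ratio: {normalized_ratio:.2f}")
```

Normalizing by frequency amounts to comparing cycle counts, so the ratio grows by the factor 120/100 relative to the direct comparison.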

Software Specialization & CFU Optimization

The multimodel_accel project uses 1x1_conv and dw_conv kernels that are specialized and optimized for both the kws and pdti8 models.

However, as the other layers became faster, the first layer of the kws model came to account for a larger share of the total processing time, so we added kws_conv, a kernel specialized for that first layer.

In the pdti8 model, which has 31 layers, the first layer is neither specialized nor optimized. In the kws model, which has only 13 layers, the first layer has a much stronger influence on the total.
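The reasoning here is Amdahl's law: once most layers are accelerated, the layer left untouched dominates what remains. The sketch below illustrates the effect with purely hypothetical numbers (10 units of time for the first layer, 90 units for all other layers, and a 10x speedup of the others); these are not measurements from the kws model, and the function name `first_layer_share` is an assumption.

```python
def first_layer_share(first_cycles, other_cycles, other_speedup):
    """Fraction of total time spent in an unaccelerated first layer
    after all other layers are sped up by `other_speedup`."""
    accelerated_other = other_cycles / other_speedup
    return first_cycles / (first_cycles + accelerated_other)


# Hypothetical workload, NOT measured kws data.
first = 10.0
others = 90.0

share_before = first_layer_share(first, others, 1.0)   # nothing accelerated
share_after = first_layer_share(first, others, 10.0)   # others 10x faster

print(f"first-layer share before: {share_before:.1%}")
print(f"first-layer share after:  {share_after:.1%}")
```

With these illustrative numbers the first layer grows from a tenth of the runtime to more than half, which is why specializing it becomes worthwhile only after the other layers have been optimized.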

Summary

We built an ML processor on an Arty A7-35T using CFU Playground. It runs inference on the Keyword Spotting model 8.9 times faster than before, in 9.8M cycles.